I am a second-year PhD student in computer science at the Georgia Institute of Technology. I have been working with Wei Xu and Alan Ritter since my second undergraduate year. My research interests are in Natural Language Processing and Machine Learning, with a focus on how large language models (LLMs) learn and generalize. I am also interested in applying LLMs for social good, such as misinformation detection and privacy risk detection.

Email / CV / Github / Google Scholar / Twitter

I'm interested in the learning and reasoning processes of large language models. My previous projects have explored the generalizability and robustness of NLP systems in diverse semantic spaces containing misinformation, language model representations of neologisms that emerge over time, and probabilistic reasoning of LLMs in real-world applications.

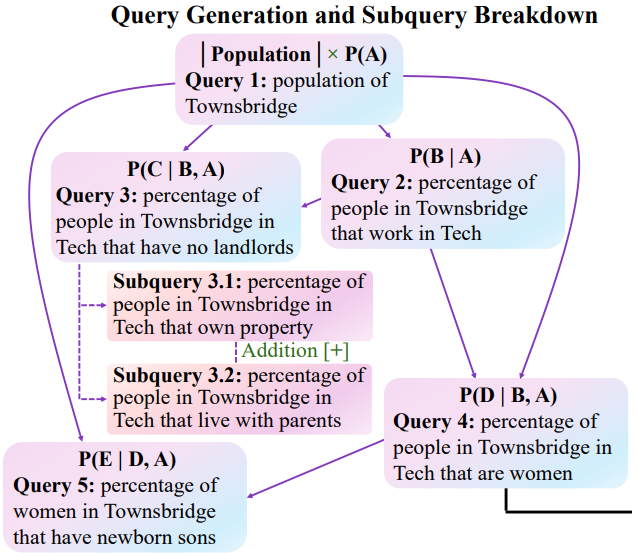

Jonathan Zheng, Sauvik Das, Alan Ritter, Wei Xu, NeurIPS, 2025 arXiv

Privacy Risk Estimation is a new probabilistic reasoning task that evaluates how well LLMs can use real-world statistics to estimate the identification risk of user-generated documents containing privacy-sensitive information.

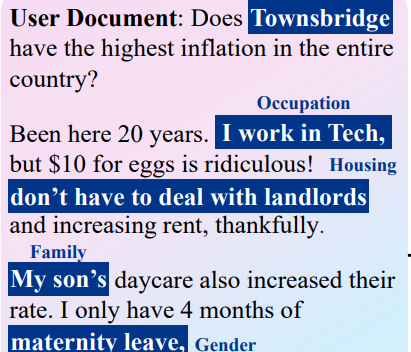

Jonathan Zheng, Alan Ritter, Wei Xu, ACL, 2024 arXiv

Neo-Bench is a novel benchmark that evaluates how well LLMs generalize to new words that emerge over time.

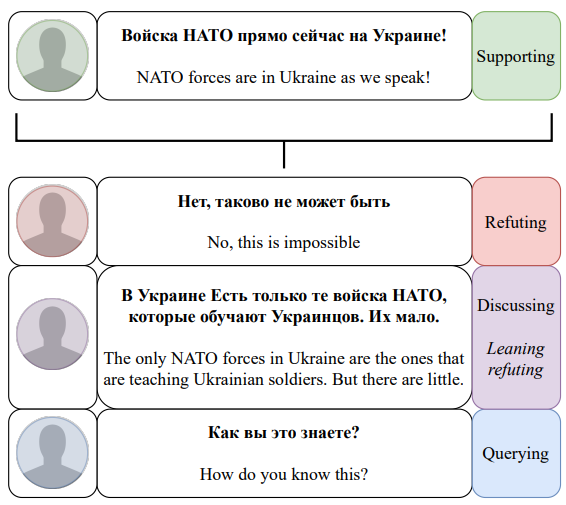

Anton Lavrouk, Ian Ligon, Tarek Naous, Jonathan Zheng, Alan Ritter, Wei Xu, W-NUT, 2024 arXiv

Stanceosaurus 2.0 extends the previous version with Russian and Spanish tweets annotated with stance towards claims, to help combat misinformation online, particularly around the ongoing conflict in Ukraine.

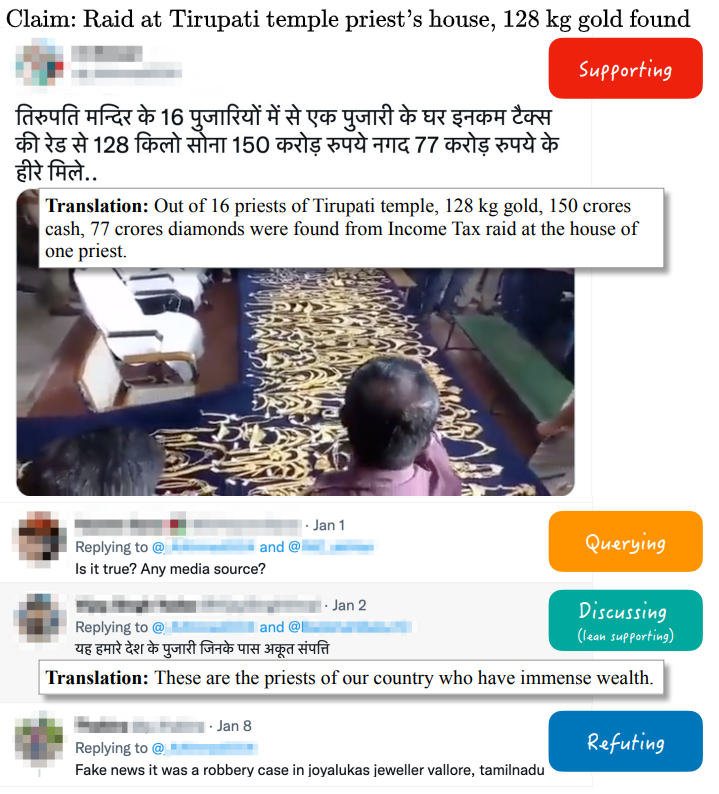

Jonathan Zheng, Ashutosh Baheti, Tarek Naous, Wei Xu, Alan Ritter, EMNLP, 2022 arXiv

Stanceosaurus is a large corpus of English, Hindi, and Arabic tweets annotated with stance towards claims, built to help combat misinformation online.